Cosine Similarity Demystified, with code

Measure similarities between textual entities

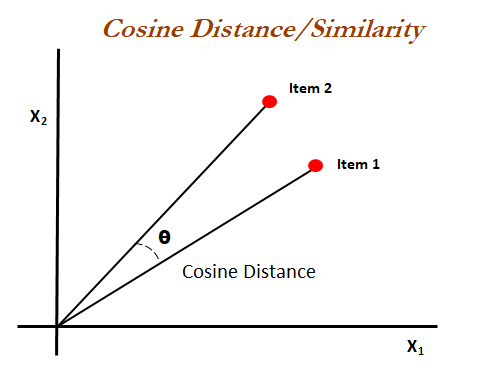

Cosine similarity is a simple metric based on the cosine distance between two objects. It can be used to measure similarities between entities. It calculates the cosine of the angle between two vectors, which ranges from 0 (no similarity) to 1 (completely similar).

In the context of recommender systems, cosine similarity is based on the similarities between different profiles based on the words that make up each profile. You can check for cosine similarity between texts based on the characters or words that make up the text. Texts with the highest number of similar words and least number of dissimilar words will have the highest similarity.

Under the hood, the words are first converted to vectors in a dimensional space, a form that the computer understands. Word vectorization has numerous and broad applications in NLP and Machine Learning. There are various types for vectorizers. Two common ones in similarity measurement are the Count and TF-IDF Vectorizer. The TF-IDF (Term Frequency-Inverse Document Frequency) is a numerical statistic that reflects the importance of a word in a document relative to a collection of documents. It is used to represent the significance of terms in a document. It gives less weight to words that are more frequent across all documents and more weight to more scarce words. The count vectorizer on the other hand measures similarity depending on the number of similar objects.

Let’s see a simple example in Python.

from sklearn.metrics.pairwise import cosine_similarity

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

docs = [

"The sun is shining brightly today.", # sun, shining, brightly, today

"Cats are playful and curious.", # cats, playful, curious

"The cats are playing in the sun." # cats, playing, sun

]

vec = TfidfVectorizer(stop_words='english').fit_transform(docs)

cos_sim = cosine_similarity(vec)

print(cos_sim)

# Output

# [[1. 0. 0.208199 ]

# [0. 1. 0.24527199]

# [0.208199 0.24527199 1. ]]

In the output above, cos_sim is a pairwise matrix of each of the sentences with each other. Notice the 1.s dimension, that represents the similarity of each sentence with itself. The comments in the documents represent the word vectors after English stop words are removed, that’s what the stop_words=’english’ parameter in the vectorizer function does. Another useful parameter is analyzer which can be set to ’char’ or ’word’. If you set it to ‘char’. It will analyze similarities between different text documents character-wise.

Check out the similarity of the third text against the first two. That corresponds to the third row of the output, [ 0.208 , 0.245 , 1. ]. The second text is more similar to the third text compared to the first despite each having one similar word, this is because the first one has more dissimilar words. Note that playful and playing have 0 similarity because the vectorizer analyzes words by default. If we were to set the analyzer parameter to ‘char’, similar characters would be accounted for.

Cosine similarity can be used in various fields. In recommendation systems, it is used to determine the similarity between user or item profiles. It can be used in text analysis, image similarity, anomaly detection, etc.

However, it has the following limitations.

Does Not Capture Semantic Meaning hence may not accurately reflect the actual similarity in meaning between text documents.

Sensitive to Vector Length. The length of a document affects the score (more dissimilar words)

Assumes Independence of Features, which is not hold true in some case.